Overview

Welcome to the ICML 2024 Workshop on Multi-modal Foundation Model meets Embodied AI

Track 1

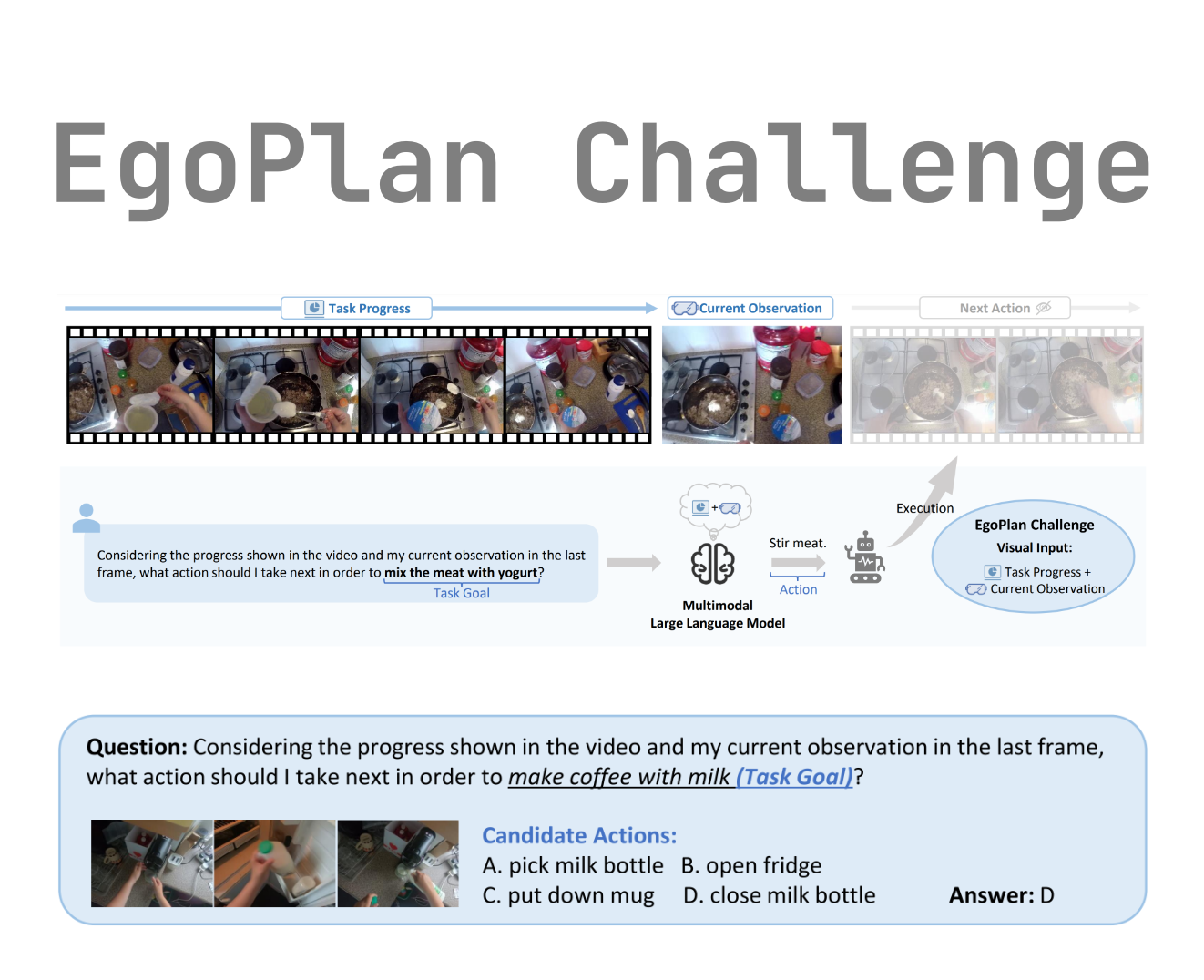

EgoPlan Challenge

The EgoPlan Challenge aims to evaluate the potential of Multimodal Large Language Models (MLLMs) as embodied task planners in real-world scenarios. In this challenge, MLLMs are required to predict the next feasible action given a real-time task progress video, current visual observation, and an open-form language instruction as inputs. Sourced from egocentric videos of everyday household activities, the EgoPlan Challenge features realistic tasks, diverse actions, and complex real-world visual observations.

Track 2

Composable Generalization Agent Challenge

The Composable Generalization Challenge focuses on exploring the capabilities of visual language models (VLMs) for high-level planning in embodied tasks that involve robotic manipulation. Through endowing the embodied agents with the ability to break down novel physical skills like “wipe”, “throw” and “receive” into constituent primitive skills, mitigating the unpredictability and intricacy when expanding the abilities of agents to novel physical skills. The challenge includes a variety of complex tasks, and each demonstration episode is clipped by the meticulously-designed primitive skills.

Details will be released in July...

Track 3

World Model Challenge

The World Model Challenge aims to evaluate the potential of World Simulator as embodied task planners and sequential decision makers in real-world scenarios. In this challenge, World Simulators are required to generate a video of an embodied scene based on the given open-form language instructions and the available history image observation frames. The task scenarios come from different embodied tasks such as robotic arms, automatic driving, etc. The generated video will be thoroughly evaluated through special embodied intelligence metrics, including physical rules and motion trajectories, to verify the embodied capabilities of the video.

Details will be released in July...

Track 1

EgoPlan Challenge

Task Description

The EgoPlan Challenge aims to evaluate the planning capabilities of Multimodal Large Language Models (MLLMs) in real-world scenarios with diverse environmental inputs. Participants must utilize MLLMs to predict the next feasible action based on real-time task progress videos, current visual observations, and open-form language instructions.

Participation

From now until July 1, 2024, participants can register for this challenge by filling out the Google Form. After the test set is released, results can be submitted via the test server since June 1, 2024. Please follow the challenge website and the GitHub repository for updates. We will also keep you updated on the challenge news through the email address you provided in the Google Form.

Award

| Outstanding Champion | USD $800 |

| Honorable Runner-up | USD $600 |

| Innovation Award | USD $600 |

Contact

Related Literature

Track 2

Composable Generalization Agent Challenge

Details will be released in July...

Track 3

World Model Challenge

Details will be released in July...

Frequently Asked Questions

Can we submit a paper that will also be submitted to NeurIPS 2024?

Yes.

Can we submit a paper that was accepted at ICLR 2024?

No. ICML prohibits main conference publication from appearing concurrently at the workshops.

Will the reviews be made available to authors?

Yes.

I have a question not addressed here, whom should I contact?

Email organizers at icml-tifa-workshop@googlegroups.com

ICML MFM-EAI Workshop

ICML MFM-EAI Workshop